SESSION 1

SD&A Invited Session

Moderator: Gregg Favalora, The Charles Stark Draper Laboratory, Inc. (United States)

Session Chair: Nicolas Holliman, University of Newcastle (United Kingdom)

Mon. 10:00 - 11:15 *

10:00: Conference Introduction

SD&A Invited Presenter 1

10:15 *

Looking back at a wonderful decade shooting live-action 3D

Demetri Portelli, I.A.T.S.E. International Cinematographer's Guild (Canada) [SD&A-049]

Invited speaker Demetri Portelli is known for his live-action stereography. He has more than 25 years of technical camera experience as a member of the IATSE, the International Cinematographer's Guild, and also works as a camera operator and technician. Trained in fine arts, he worked extensively in the theatre growing up and taught himself camerawork by shooting Super 8mm, 16mm, and 35mm shorts and music videos before his professional career began. As stereographer and stereo supervisor, Portelli is hands-on during both shooting and post-production. He executes stereo with great precision on the fly, making interocular (IO) adjustments to 'bake in' the best possible 3D depth, volumetric shape, and audience proximity. Working alongside the director and cinematographer, he always aims to design a truly engaging 3D experience, from depth planning and shot conception through post-production with VFX stereo comps, final geometry checks, and creative convergence placement during the grade.



SD&A Invited Presenter 2


10:45 *


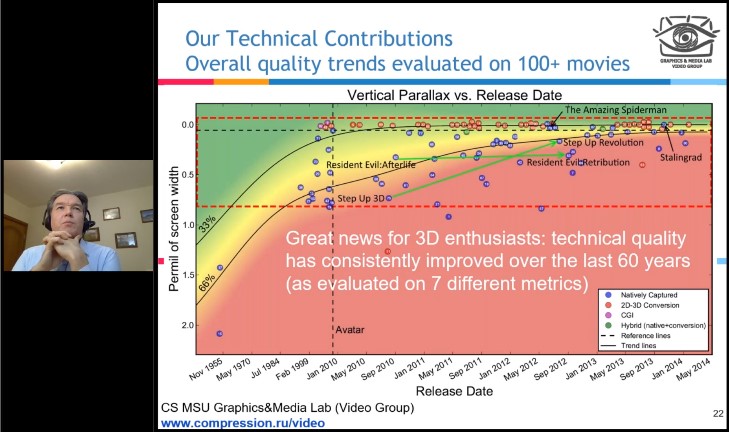

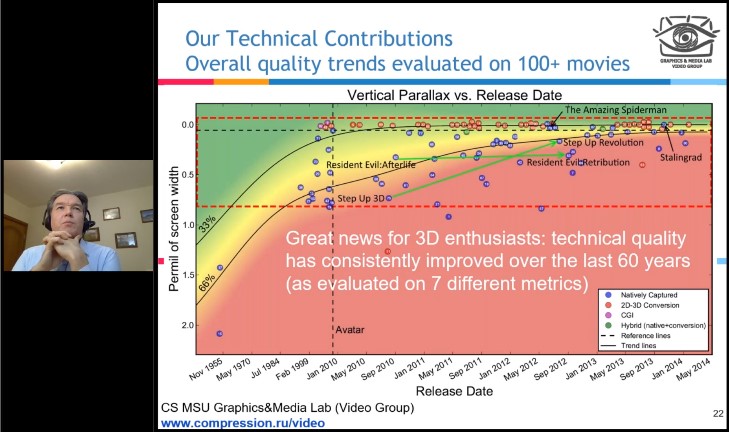

When 3D headache will be over: A decade of movie quality measurements

Dmitriy Vatolin, Lomonosov Moscow State University (Russian Federation) [SD&A-050]


Dmitriy Vatolin received his PhD from Moscow State University (2000) and is currently head of the Video Group at the CS MSU Graphics & Media Lab. His research interests include compression methods, video processing, and 3D-video techniques (depth from motion, focus and other cues, video matting, background restoration, and high-quality stereo generation), as well as 3D-video quality assessment (metrics for 2D-to-3D-conversion artifacts, temporal asynchrony, swapped views, and more). He is a chief organizer of the VQMT3D project for 3D-video quality measurement.

Session Break

Mon. 11:15 - 11:45

SESSION 2

Stereoscopic Developments

Moderator: Nicolas Holliman, University of Newcastle (United Kingdom)

Session Chair: Gregg Favalora, The Charles Stark Draper Laboratory, Inc. (United States)

Mon. 11:45 - 12:45 *

11:45: Light field rendering for non-Lambertian objects, Sarah Fachada1, Daniele Bonatto1,2, Mehrdad Teratani1, and Gauthier Lafruit1; 1Université Libre de Bruxelles and 2Vrije Universiteit Brussel (Belgium) [SD&A-054]

12:05: Custom on-axis head-mounted eye tracker for 3D active glasses, Vincent Nourrit1, Rémi Poilane2, and Jean-Louis de Bougrenet de la Tocnaye1; 1IMT Atlantique Bretagne-Pays de la Loire - Campus de Brest and 2E3S (France) [SD&A-055]

12:25: Immersive design engineering, Part 2 - The review, Bjorn Sommer, Chang Lee, Nat Martin, and Savina Toirrisi, Royal College of Art (United Kingdom) [SD&A-056]

SESSION 3

Stereoscopic Displays, Cameras, and Algorithms

Moderator: Gregg Favalora, The Charles Stark Draper Laboratory, Inc. (United States)

Session Chair: Nicolas Holliman, University of Newcastle (United Kingdom)

Mon. 13:15 - 14:35 *

13:15: Near eye mirror anamorphosis display, Kedrick Brown, Lightscope Media LLC (United States) [SD&A-057]

13:35: Hybrid stereoscopic photography - Two vintage analogue cameras meet the digital age, Bjorn Sommer, Royal College of Art (United Kingdom) [SD&A-058]

13:55: A new hybrid stereo disparity estimation algorithm with guided image filtering-based cost aggregation, Hanieh Shabanian and Madhusudhanan Balasubramanian, The University of Memphis (United States) [SD&A-059]

14:15: Stereoscopic quality assessment of 1,000 VR180 videos using 8 metrics, Dmitriy Vatolin, Lomonosov Moscow State University (Russian Federation) [SD&A-350]

Session Break

Mon. 14:35 - 18:00

SESSION 4

Autostereoscopic Displays

Moderator: Andrew Woods, Curtin University (Australia)

Session Chair: Takashi Kawai, Waseda University (Japan)

Mon. 18:00 - 19:15 *

18:00: Conference Introduction

18:15: Holographic display utilizing scalable array of edge-emitting SAW modulators, Gregg Favalora, Michael Moebius, John LeBlanc, Valerie Bloomfield, Joy Perkinson, James Hsiao, Sean O'Connor, Dennis Callahan, William Sawyer, Francis Rogomentich, and Steven Byrnes, The Charles Stark Draper Laboratory, Inc. (United States) [SD&A-010]

18:35: Towards AO/EO modulators in lithium niobate for dual-axis holographic displays, Mitchell Adams, Caitlin Bingham, and Daniel Smalley, Brigham Young University (United States) [SD&A-011]

18:55: Enhancing angular resolution of layered light-field display by using monochrome layers, Kotaro Matsuura, Keita Takahashi, and Toshiaki Fujii, Nagoya University (Japan) [SD&A-012]

Session Break

Mon. 19:15 - 19:45

SD&A Keynote 1

Moderator: Takashi Kawai, Waseda University (Japan)

Session Chair: Andrew Woods, Curtin University (Australia)

Mon. 19:45 - 20:45 *

Underwater 3D system for ultra-high resolution imaging

Pawel Achtel, Achtel Pty Limited (Australia) [SD&A-029]


Cinematographer and inventor, keynote speaker Pawel Achtel, ACS, will explain some of the challenges we face in 3D underwater cinematography and how his breakthrough innovation made it to the set of one of the most anticipated Hollywood blockbuster movies. Underwater flat ports and dome ports, a compromise we have all come to expect and live with since the advent of underwater photography, have substantially limited underwater image quality as camera resolutions have increased. Additional constraints on the size of the underwater optics used in stereoscopic 3D setups further degraded image quality, reducing it to roughly standard-definition levels. Submersible lenses are now the industry's gold standard, and combined with Achtel's invention, a submersible beam-splitter, they produce images so far ahead of other solutions that the results are as good as, or even better than, those achieved on land. Achtel takes a scientific approach in which almost every aspect of image quality is meticulously measured, compared, and ultimately improved, leading to outcomes that can be objectively quantified. Achtel also holds a Master's degree in engineering, which allowed him to design one of the most advanced underwater 3D systems ever built. His company's patented 3D beam-splitter was recently used extensively in New Zealand on James Cameron's Avatar sequels, prompting the legendary Hollywood director to write that the results were, by far, the best underwater 3D images he had ever seen. Indeed, the system can resolve 8K corner-to-corner measured resolution on screen without distortions, aberrations, or image-plane curvature, a world first. Achtel will share some of his insights on what to expect from underwater images when Avatar 2 hits the screens in 2022.


EI Plenary

Session Chair: Charles Bouman, Purdue University (United States)

10:00 - 11:10

Deep Internal Learning - Deep Learning with Zero Examples

Michal Irani, Professor in the Department of Computer Science and Applied Mathematics at the Weizmann Institute of Science (Israel)

In this talk, Prof. Irani will show how complex visual inference tasks can be performed with deep learning in a totally unsupervised way, by training on a single image: the test image itself. The strong recurrence of information inside a single natural image provides powerful internal examples, which suffice for self-supervision of deep networks without any prior examples or training data. This new paradigm gives rise to true "Zero-Shot Learning". She will demonstrate the power of this approach on a variety of problems, including super-resolution, image segmentation, transparent layer separation, image dehazing, image retargeting, and more. Additionally, Prof. Irani will show how self-supervision can be used for "Mind-Reading" from very little fMRI data.

Michal Irani is a professor at the Weizmann Institute of Science. Her research interests include computer vision, AI, and deep learning. Irani's prizes and honors include the Maria Petrou Prize (2016), the Helmholtz "Test of Time Award" (2017), the Landau Prize in AI (2019), and the Rothschild Prize in Mathematics and Computer Science (2020). She also received the ECCV Best Paper Awards (2000 and 2002), and the Marr Prize Honorable Mention (2001 and 2005).

Session Break

11:10 - 11:40

Other Electronic Imaging Symposium events.

Session Break

14:30 - 18:00

SESSION 5

Stereoscopic Content and Quality

Moderator: Andrew Woods, Curtin University (Australia)

Session Chair: Takashi Kawai, Waseda University (Japan)

Tue. 18:00 - 19:15 *

18:00: Conference Introduction

18:15: Evaluating user experience of different angle VR images, Yoshihiro Banchi and Takashi Kawai, Waseda University (Japan) [SD&A-098]

18:35: JIST-first: Crosstalk minimization method for eye-tracking based 3D display, Seok Lee, Juyong Park, and Dongkyung Nam, Samsung Advanced Institute of Technology (Republic of Korea) [SD&A-099]

18:55: Sourcing and qualifying passive polarised 3D TVs, Andrew Woods, Curtin University (Australia) [SD&A-100]

SESSION 6

Conference Interactive Posters and Demonstrations

19:15 - 19:45 *

ISS POSTER: Estimation of any fields of lens PSFs for image simulation, Sangmin Kim1, Daekwan Kim1, Kilwoo Chung1, and JoonSeo Yim2; 1Samsung Electronics Device Solutions and 2Samsung Electronics (Republic of Korea) [ISS-072]

SD&A DEMO: Sourcing and qualifying passive polarised 3D TVs, Andrew Woods, Curtin University (Australia) [SD&A-100D]

SD&A Keynote 2

Moderator: Takashi Kawai, Waseda University (Japan)

Session Chair: Andrew Woods, Curtin University (Australia)

Tue. 19:45 - 20:45 *

Digital Stereoscopic Microscopy

Michael Weissman and Michael Teitel, SB3D Technologies, Inc. (United States) [SD&A-088]

In this address, we consider an important application of digital stereoscopic imaging, stereoscopic microscopy, and its limitations. As an overview of the field, we will first cover some history of the stereomicroscope itself, including a review of the various optical designs and the advent of video and digital cameras. Then we will explore a major limitation of the stereomicroscope for UHD (4K and beyond) electronic imaging.

Stereomicroscopes have been around for a long time. The first one was built in the 1670s, but major advances awaited a better understanding of stereoscopic vision, which came in the 1800s through the work of Wheatstone and others. Two main designs evolved: the "Greenough" and the "Common Main Objective" or "CMO".

Video cameras were added in the mid-20th century, first by simply replacing the eyepieces, then by the addition of "ports" for the two optical channels. An "integrated" design was invented by Sam Rod in 1996. Rod created a "pod" that would hold two cameras and attach easily to a CMO-type stereomicroscope.

TrueVision Systems, Inc., converted Rod's pod into a single stereoscopic camera, one that contained two high-definition sensors (1920x1080 pixels for each eye), and was supplied as an "add-on" to ophthalmic and neurological operating microscopes. The fully digital stereo stream passed through a computer to a 3D display.

The next stage of digital video evolution is "4K", that is, the use of sensors with twice as many pixels horizontally (roughly 4000) and vertically (roughly 2000), and therefore four times as many pixels in total. In order to pack all these pixels into sensors of the same size (so that the microscope does not need to grow in size and weight), the pixel dimensions must shrink by a factor of two. The sensors that we typically use have pixels that are 1.55 micrometers square. This puts extra demands on the optics.

Because of diffraction, any optical system is limited in resolution by its f-number (focal length divided by entrance pupil diameter) or by its numerical aperture, no matter how good the quality of the lens design. One way to describe this limitation is to calculate the "limiting spot size", that is, the image of an infinitely small point of light in the object field. In order to maximize the potential of a 4K sensor, the spot size should not be bigger than the pixel size. If it is, we say the microscope has "empty magnification".

I will show that, in a typical CMO microscope at its highest magnification, the spot size is about 18 micrometers, many times the sensor pixel size. Similar results obtain for the Greenough design.

What to do? In order to build an optical system that will support the very small pixels of 4K (and beyond!) sensors, we need to decrease the focal length, increase the aperture, or increase the numerical aperture. I will explain why it is very difficult to do this for a usable stereomicroscope, and how this challenge is related to the "Sense of Depth" that we experience when viewing stereoscopic images.
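To make the spot-size argument concrete, here is a minimal Python sketch. It assumes the common diffraction-limited estimate of the spot as the Airy-disk diameter d ≈ 2.44 λ N (λ the wavelength, N the working f-number) and an assumed mid-visible wavelength of 0.55 µm; the function names are hypothetical, and this is not the speakers' own calculation. Only the 1.55 µm pixel pitch comes from the abstract.

```python
"""Diffraction-limited spot size vs. sensor pixel pitch (illustrative sketch)."""

AIRY_FACTOR = 2.44          # Airy-disk diameter to the first dark ring: d = 2.44 * lambda * N
GREEN_WAVELENGTH_UM = 0.55  # assumed mid-visible wavelength, in micrometers


def airy_spot_diameter_um(f_number: float,
                          wavelength_um: float = GREEN_WAVELENGTH_UM) -> float:
    """Diameter (um) of the diffraction-limited spot for a given working f-number."""
    return AIRY_FACTOR * wavelength_um * f_number


def has_empty_magnification(f_number: float, pixel_um: float,
                            wavelength_um: float = GREEN_WAVELENGTH_UM) -> bool:
    """True when the spot is larger than one pixel, so the optics cannot feed the sensor."""
    return airy_spot_diameter_um(f_number, wavelength_um) > pixel_um


def max_f_number_for_pixel(pixel_um: float,
                           wavelength_um: float = GREEN_WAVELENGTH_UM) -> float:
    """Largest working f-number whose spot still fits within one pixel."""
    return pixel_um / (AIRY_FACTOR * wavelength_um)


if __name__ == "__main__":
    pixel_4k_um = 1.55  # pixel pitch quoted in the abstract
    print(f"f-number limit for {pixel_4k_um} um pixels: "
          f"f/{max_f_number_for_pixel(pixel_4k_um):.2f}")
    for n in (2.0, 8.0):
        print(f"f/{n:g}: spot {airy_spot_diameter_um(n):.2f} um, "
              f"empty magnification: {has_empty_magnification(n, pixel_4k_um)}")
```

Under these assumptions the spot fits inside a 1.55 µm pixel only at a working aperture faster than roughly f/1.2, which gives a sense of why the abstract describes this as very difficult for a practical stereomicroscope.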

Michael Weissman, PhD, founder of TrueVision Systems, Inc., is a technical visionary and entrepreneur with more than 40 years of R&D experience. As one of the world's leading stereoscopic experts, Weissman has been developing 3D stereoscopic video systems for more than 25 years. His 3D systems have traveled two miles under the sea, into radioactive waste sites, and into hospital operating rooms.


Relevant presentation in associated "3D Imaging and Applications" Electronic Imaging conference:

20:05: JIST-first: Application of photogrammetric 3D reconstruction to scanning electron microscopy: Considerations for volume analysis, William Rickard, Jéssica Fernanda Ramos Coelho, Joshua Hollick, Susannah Soon, and Andrew Woods, Curtin University (Australia) [3DIA-102]

EI Plenary

Session Chair: Jonathan B. Phillips, Google Inc. (United States)

10:00 - 11:10

The Development of Integral Color Image Sensors and Cameras

Kenneth A. Parulski, Expert Consultant: Mobile Imaging (United States)

Over the last three decades, integral color image sensors have revolutionized all forms of color imaging. Billions of these sensors are used each year in a wide variety of products, including smart phones, webcams, digital cinema cameras, automobiles, and drones. Kodak Research Labs pioneered the development of color image sensors and single-sensor color cameras in the mid-1970s. A team led by Peter Dillon invented integral color sensors, along with the image processing circuits needed to convert the color mosaic samples into a full-color image. They developed processes to coat color mosaic filters during the wafer fabrication stage and invented the "Bayer" checkerboard pattern, which is widely used today. But the technology for fabricating color image sensors, and the algorithms used to process the mosaic color image data, have been continuously improving for almost 50 years. This talk describes early work by Kodak and other companies, as well as major technology advances and opportunities for the future.
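As a rough illustration of what a single-sensor color camera records, the sketch below samples a full-color image through a Bayer-type checkerboard mosaic and then estimates missing green values by averaging neighbors. The RGGB phase, the function names, and the plain bilinear averaging are assumptions chosen for brevity; they are not Kodak's original processing circuits, and production demosaicing algorithms are considerably more elaborate.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def bayer_channel(row: int, col: int) -> str:
    """Color filter at (row, col) for an assumed RGGB layout: green on a checkerboard."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(image: List[List[RGB]]) -> List[List[int]]:
    """Keep only the single color sample each photosite would record."""
    return [
        [pixel[CHANNEL_INDEX[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(image)
    ]


def interpolate_green(samples: List[List[int]], row: int, col: int) -> float:
    """Bilinear green estimate; at red or blue sites all 4-neighbors are green."""
    if bayer_channel(row, col) == "G":
        return float(samples[row][col])
    neighbors = [
        samples[r][c]
        for r, c in ((row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1))
        if 0 <= r < len(samples) and 0 <= c < len(samples[0])
    ]
    return sum(neighbors) / len(neighbors)


if __name__ == "__main__":
    flat = [[(10, 20, 30)] * 4 for _ in range(4)]  # uniform 4x4 test image
    raw = mosaic(flat)
    greens = sum(bayer_channel(r, c) == "G" for r in range(4) for c in range(4))
    print(raw)
    print("green photosites:", greens, "of 16")
    print("green estimate at red site (0, 0):", interpolate_green(raw, 0, 0))
```

Half of the photosites in the checkerboard are green, reflecting the fact that green contributes most to perceived luminance detail.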

Kenneth Parulski is an expert consultant to mobile imaging companies and leads the development of ISO standards for digital photography. He joined Kodak in 1980 after graduating from MIT and retired in 2012 as a research fellow and chief scientist in Kodak's digital photography division. His work has been recognized with a Technical Emmy and other major awards. Parulski is a SMPTE fellow and an inventor on more than 225 US patents.

Other Electronic Imaging Symposium events occurred across the day.

SESSION 7

Immersive Experiences

Moderator: Ian McDowall, Intuitive Surgical / Fakespace Labs (United States)

Session Chair: Margaret Dolinsky, Indiana University (United States)

Thu. 13:30 - 14:30 *

This session is jointly sponsored by The Engineering Reality of Virtual Reality 2021 and Stereoscopic Displays and Applications XXXII.

13:30: Interdisciplinary immersive experiences within artistic research, social and cognitive sciences, Adnan Hadzi, University of Malta (Malta) [ERVR-167]

14:10: Predicting VR discomfort, Vasilii Marshev, Jean-Louis de Bougrenet de la Tocnaye, and Vincent Nourrit, IMT Atlantique Bretagne-Pays de la Loire - Campus de Brest (France) [ERVR-168]

Session Break

14:30 - 18:00

SESSION 8

VR and 3D Applications

Moderator: Margaret Dolinsky, Indiana University (United States)

Session Chair: Ian McDowall, Intuitive Surgical / Fakespace Labs (United States)

Thu. 18:15 - 19:15 *

This session is jointly sponsored by The Engineering Reality of Virtual Reality 2021 and Stereoscopic Displays and Applications XXXII.

18:15: Situational awareness of COVID pandemic data using virtual reality, Sharad Sharma, Bowie State University (United States) [ERVR-177]

18:35: Virtual reality instructional (VRI) module for training and patient safety, Sharad Sharma, Bowie State University (United States) [ERVR-178]

18:55: Server-aided 3D DICOM viewer for mobile platforms, Menghe Zhang and Jürgen Schulze, University of California San Diego (United States) [ERVR-179]


EI Plenary

Session Chair: Jonathan B. Phillips, Google Inc. (United States)

10:00 - 11:10

Making Invisible Visible

Ramesh Raskar, Associate Professor, MIT Media Lab

The invention of X-ray imaging enabled us to see inside our bodies. The invention of thermal infrared imaging enabled us to depict heat. Over the last few centuries, then, the key to making the invisible visible was recording with new slices of the electromagnetic spectrum. But the impossible photos of tomorrow won't be recorded; they'll be computed. Ramesh Raskar's group has pioneered the field of femto-photography, which uses a high-speed camera to visualize the world at nearly a trillion frames per second, so that we can create slow-motion movies of light in flight. These techniques enable the seemingly impossible: seeing around corners, seeing through fog as if it were a sunny day, and detecting circulating tumor cells with a device resembling a blood pressure cuff. Raskar and his colleagues in the Camera Culture Group at the MIT Media Lab have advanced fundamental techniques and have pioneered new imaging and computer vision applications. Their work centers on the co-design of novel imaging hardware and machine learning algorithms, including techniques for the automated design of deep neural networks. Many of Raskar's projects address healthcare, such as EyeNetra, a start-up that extends the capabilities of smart phones to enable low-cost eye exams. In his plenary talk, Raskar shares highlights of his group's work, and his unique perspective on the future of imaging, machine learning, and computer vision.
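A back-of-envelope figure shows why light itself can be watched in flight at these frame rates. Assuming exactly 10^12 frames per second (rounding "nearly a trillion" up) and c ≈ 3.0 × 10^8 m/s, light advances only about a third of a millimeter between consecutive frames:

```latex
\Delta t = \frac{1}{10^{12}\,\mathrm{s^{-1}}} = 10^{-12}\,\mathrm{s} = 1\,\mathrm{ps},
\qquad
\Delta x = c\,\Delta t \approx \left(3.0\times10^{8}\,\mathrm{m/s}\right)\left(10^{-12}\,\mathrm{s}\right)
         = 3.0\times10^{-4}\,\mathrm{m} \approx 0.3\,\mathrm{mm}
```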

Ramesh Raskar is an associate professor at the MIT Media Lab and directs the Camera Culture research group. His focus is on AI and imaging for health and sustainability, spanning research in physical (e.g., sensors, health-tech), digital (e.g., automated and privacy-aware machine learning), and global (e.g., geomaps, autonomous mobility) domains. He received the Lemelson Award (2016), ACM SIGGRAPH Achievement Award (2017), DARPA Young Faculty Award (2009), Alfred P. Sloan Research Fellowship (2009), TR100 Award from MIT Technology Review (2004), and Global Indus Technovator Award (2003). He has worked on special research projects at Google [X] and Facebook and co-founded/advised several companies.

EI Plenary

Session Chair: Radka Tezaur, Intel Corporation (United States)

10:00 - 11:10

Revealing the Invisible to Machines with Neuromorphic Vision Systems: Technology and Applications Overview

Luca Verre, CEO and Co-Founder at PROPHESEE, Paris, France

Since their inception 150 years ago, all conventional video tools have represented motion by capturing a number of still frames each second. Displayed rapidly, such images create an illusion of continuous movement. From the flip book to the movie camera, the illusion became more convincing, but its basic structure never really changed. For a computer, this representation of motion is of little use. The camera is blind between frames, losing information on moving objects. Even when the camera is recording, each of its "snapshot" images contains no information about the motion of elements in the scene. Worse still, within each image, the same irrelevant background objects are repeatedly recorded, generating excessive unhelpful data. Evolution developed an elegant solution so that natural vision never encounters these problems. It doesn't take frames. Cells in our eyes report back to the brain when they detect a change in the scene: an event. If nothing changes, the cell doesn't report anything. The more an object moves, the more our eye and brain sample it. This is the founding principle behind Event-Based Vision: independent receptors collecting all the essential information, and nothing else. Discover how a new bio-inspired machine vision category is transforming the industrial (Industry 4.0), consumer, and automotive markets.
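The event principle described above can be sketched in Python by converting a sequence of ordinary frames into per-pixel change events. This follows a simplified model often used for event-camera simulation, in which a pixel fires when its log intensity has moved by more than a contrast threshold since its last event. The threshold value, the `Event` fields, and the `frames_to_events` name are hypothetical choices for illustration, not Prophesee's sensor design or API, and a real sensor responds asynchronously rather than at frame times.

```python
import math
from typing import List, NamedTuple


class Event(NamedTuple):
    t: float       # timestamp of the frame in which the change was detected
    x: int         # column
    y: int         # row
    polarity: int  # +1 brighter, -1 darker


def frames_to_events(frames: List[List[List[float]]], timestamps: List[float],
                     threshold: float = 0.2) -> List[Event]:
    """Emit an event each time a pixel's log intensity changes by `threshold`.

    Intensities must be positive. Pixels that do not change produce no output.
    """
    reference = [[math.log(v) for v in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                delta = math.log(value) - reference[y][x]
                # A large change crosses the threshold several times -> several events.
                while abs(delta) >= threshold:
                    polarity = 1 if delta > 0 else -1
                    events.append(Event(t, x, y, polarity))
                    reference[y][x] += polarity * threshold
                    delta = math.log(value) - reference[y][x]
    return events


if __name__ == "__main__":
    still = [[1.0, 1.0], [1.0, 1.0]]
    brighter = [[1.0, 2.0], [1.0, 1.0]]  # one pixel doubles in brightness
    print(frames_to_events([still, still], [0.0, 0.01]))     # static scene
    print(frames_to_events([still, brighter], [0.0, 0.01]))  # one changing pixel
```

The static scene yields no events at all, while the single brightening pixel yields a short burst of positive events at its own coordinates, which is the data-reduction property the abstract describes.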

Luca Verre is co-founder and CEO of Prophesee, the inventor of the world's most advanced neuromorphic vision systems. Verre is a World Economic Forum technology pioneer. His experience includes project and product management, marketing, and business development roles at Schneider Electric. Prior to Schneider Electric, Verre worked as a research assistant in photonics at Imperial College London. Verre holds an MSc in physics, electronic and industrial engineering from Politecnico di Milano and Ecole Centrale and an MBA from Institut Européen d'Administration des Affaires, INSEAD.

See other Electronic Imaging Symposium events at: www.ElectronicImaging.org


* Advertisers are not directly affiliated with or specifically endorsed by the Stereoscopic Displays and Applications conference.

Maintained by: Andrew Woods

Revised: 5 February, 2024.
